Diagnostic classification models

A brief introduction

Conceptual foundations

- Traditional assessments and psychometric models measure an overall skill or ability

- Assume a continuous latent trait

Traditional methods

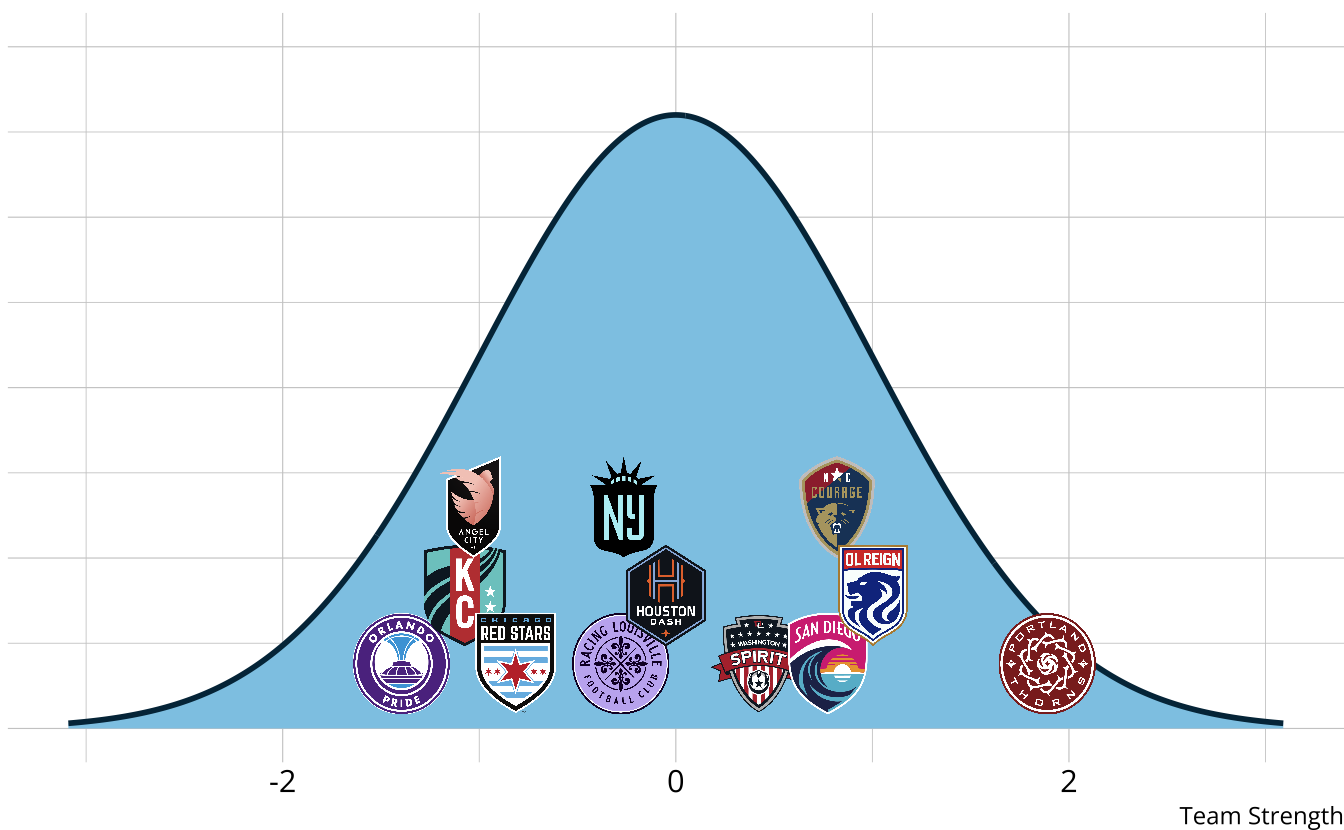

- Because of estimation error, the output is only a weak ordering of teams

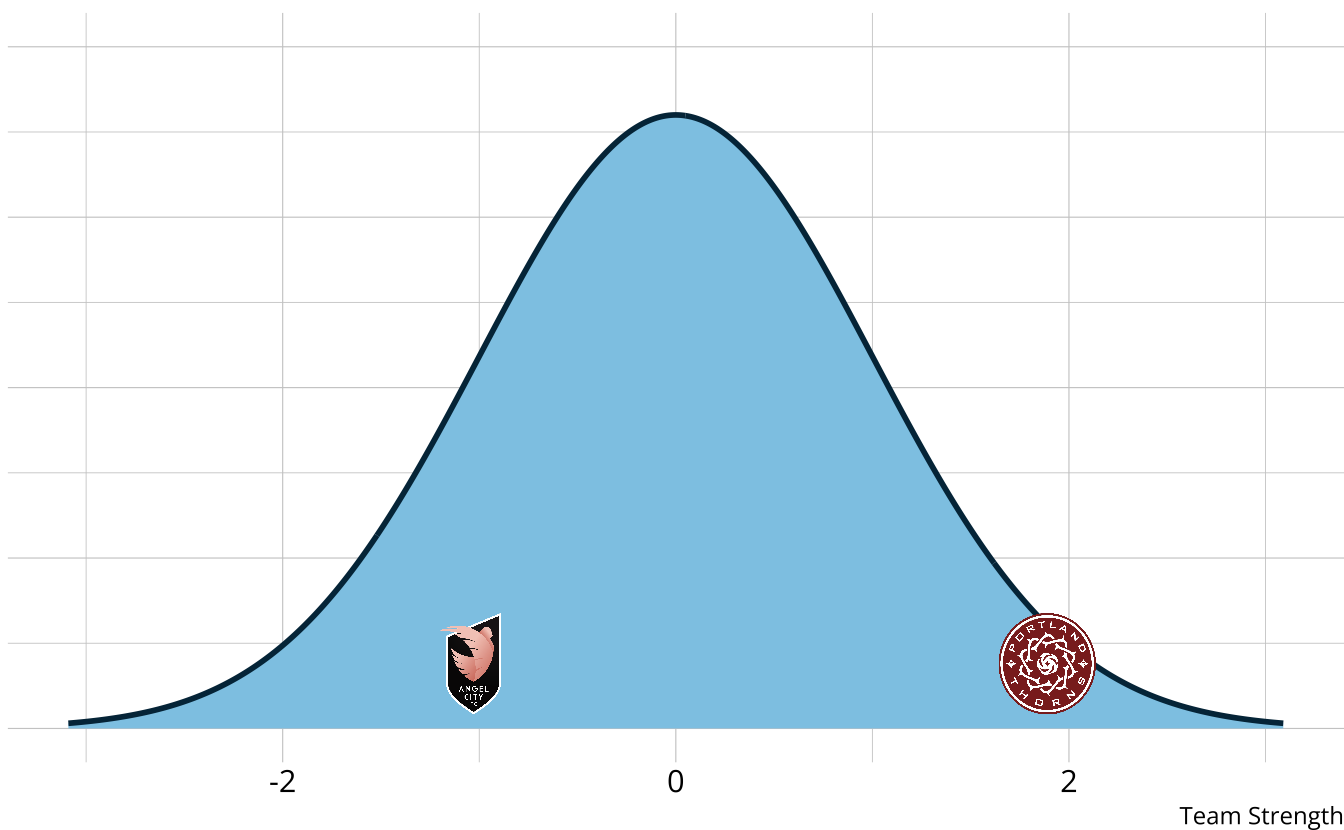

- We are confident that the Portland Thorns are the best

- We are not confident which team is second best (OL Reign, North Carolina Courage, or San Diego Wave)

- Limited in the types of questions that can be answered

- Why is Kansas City so low?

- What aspects are teams competent/proficient in?

- How much skill is “enough” to pass?

Soccer example

- Rather than measuring overall team strength, we can break soccer down into a set of skills or attributes

- Finishing

- Possession

- Defending

- Goalkeeping

- Attributes are categorical, often dichotomous (e.g., proficient vs. non-proficient)

Diagnostic classification models

- DCMs place individuals into groups according to their proficiency on multiple attributes

| finishing | possession | defending | goalkeeping | |
|---|---|---|---|---|

Answering more questions

- Why is Kansas City so low?

- Poor finishing, defending, and goalkeeping

- What aspects are teams competent/proficient in?

- DCMs provide classifications directly

Diagnostic psychometrics

- Designed to be multidimensional

- No continuum of student achievement

- Categorical constructs

- Usually binary (e.g., master/nonmaster, proficient/not proficient)

- Several different names in the literature

- Diagnostic classification models (DCMs)

- Cognitive diagnostic models (CDMs)

- Skills assessment models

- Latent response models

- Restricted latent class models

When are DCMs appropriate?

Success depends on:

- Domain definitions

- What are the attributes we’re trying to measure?

- Are the attributes measurable (e.g., with assessment items)?

- Alignment of purpose between assessment and model

- Is classification the purpose?

Example applications

- Educational measurement: The competencies that a student is or is not proficient in

- Latent knowledge, skills, or understandings

- Used for tailored instruction and remediation

- Psychiatric assessment: The DSM criteria that an individual meets

- Broader diagnosis of a disorder

When are DCMs not appropriate?

When the goal is to place individuals on a scale

DCMs do not distinguish within classes

| finishing | possession | defending | goalkeeping | |
|---|---|---|---|---|

Conceptual foundation summary

- DCMs are psychometric models designed to classify

- We can define our attributes in any way that we choose

- Items depend on the attribute definitions

- Classifications are probabilistic

- Fewer items are needed to classify than to rank or scale

- DCMs provide valuable information with more feasible data demands than other psychometric models

- Higher reliability than IRT/MIRT models

- Naturally accommodates multidimensionality

- Complex item structures possible

- Criterion-referenced interpretations

- Alignment of assessment goals and psychometric model

Statistical foundations

Statistical foundation

Latent class models use responses to probabilistically place individuals into latent classes

DCMs are confirmatory latent class models

- Latent classes specified a priori as attribute profiles

- Q-matrix specifies item-attribute structure

- Person parameters are attribute proficiency probabilities

Terminology

Respondents (r): The individuals from whom behavioral data are collected

- For today, these are dichotomous assessment item responses

- Not limited to only item responses in practice

Items (i): Assessment questions used to classify/diagnose respondents

Attributes (a): Unobserved latent categorical characteristics underlying the behaviors (i.e., diagnostic status)

- Latent variables

Diagnostic Assessment: The method used to elicit behavioral data

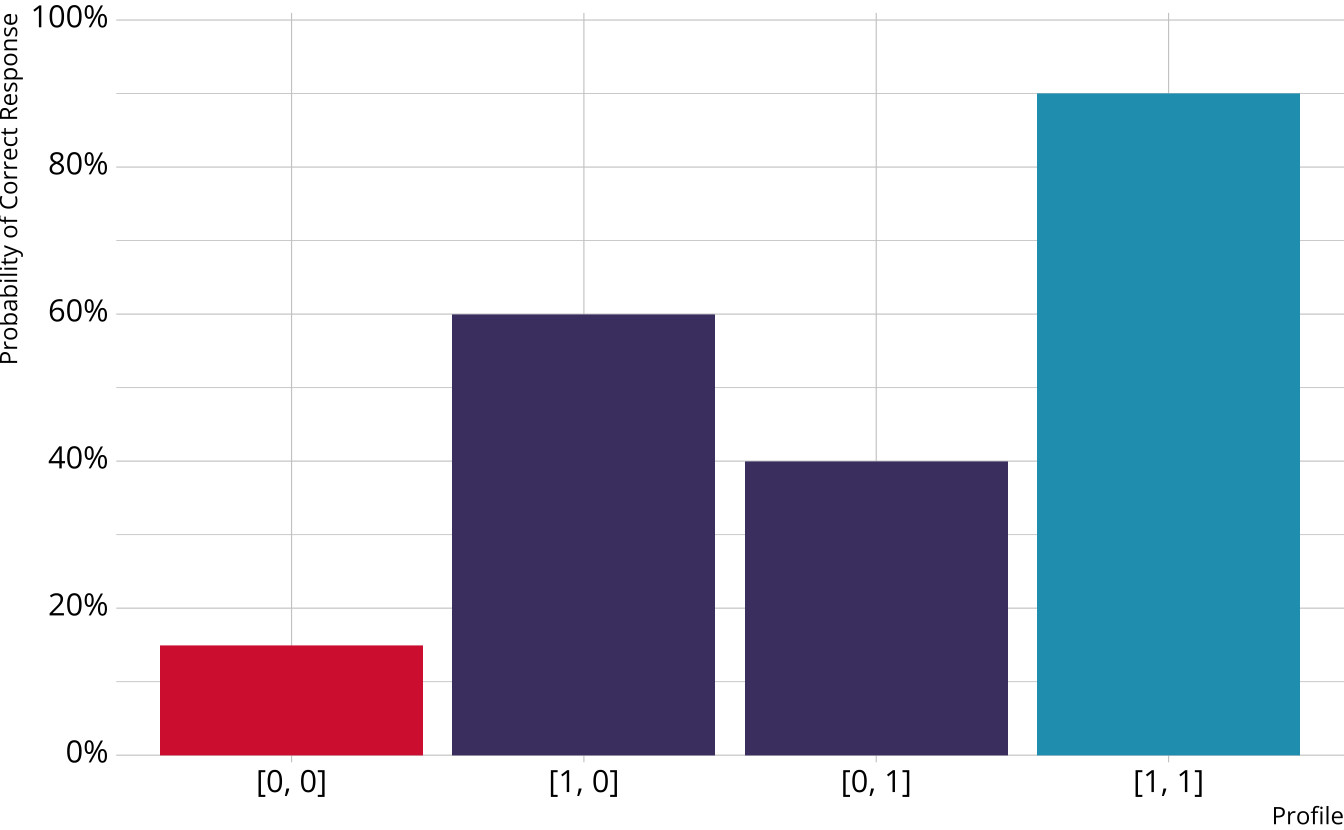

Attribute profiles

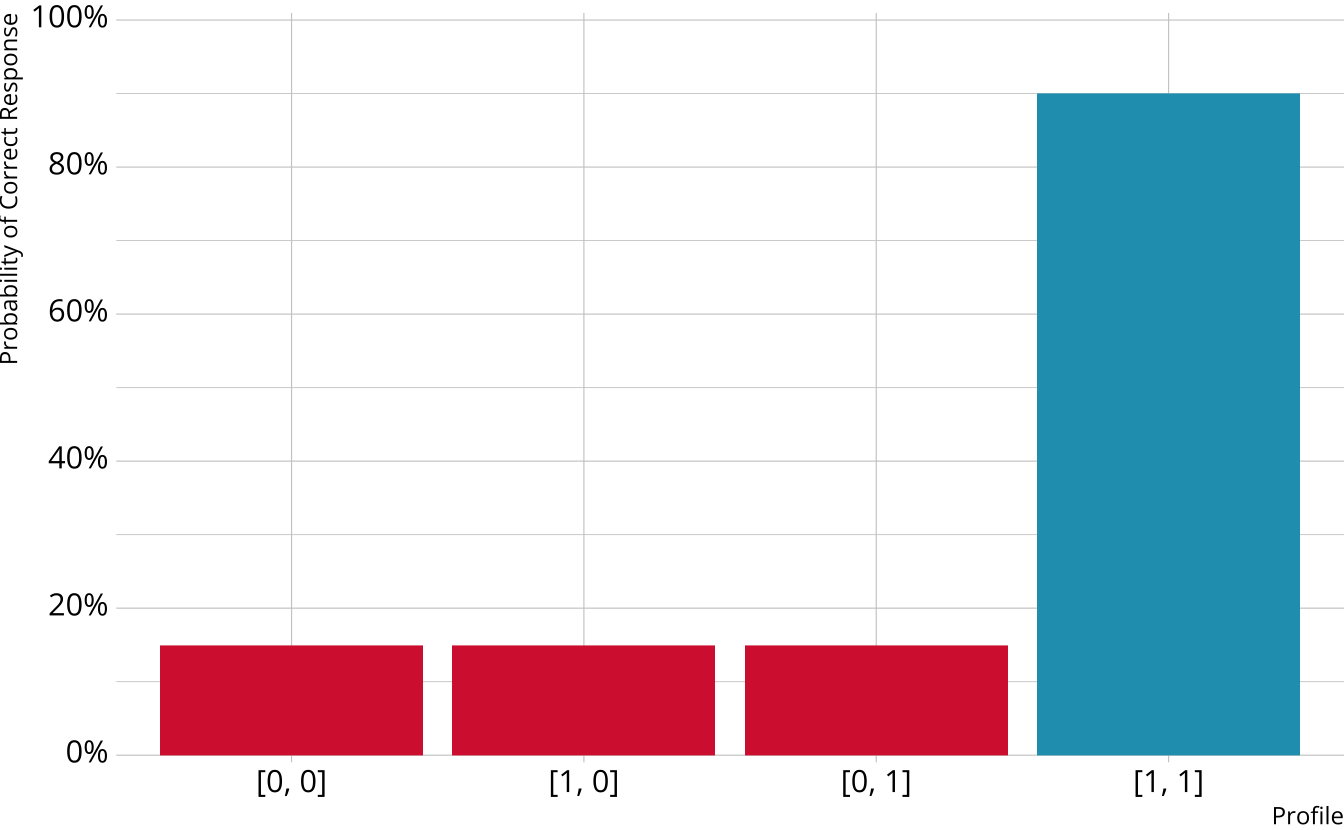

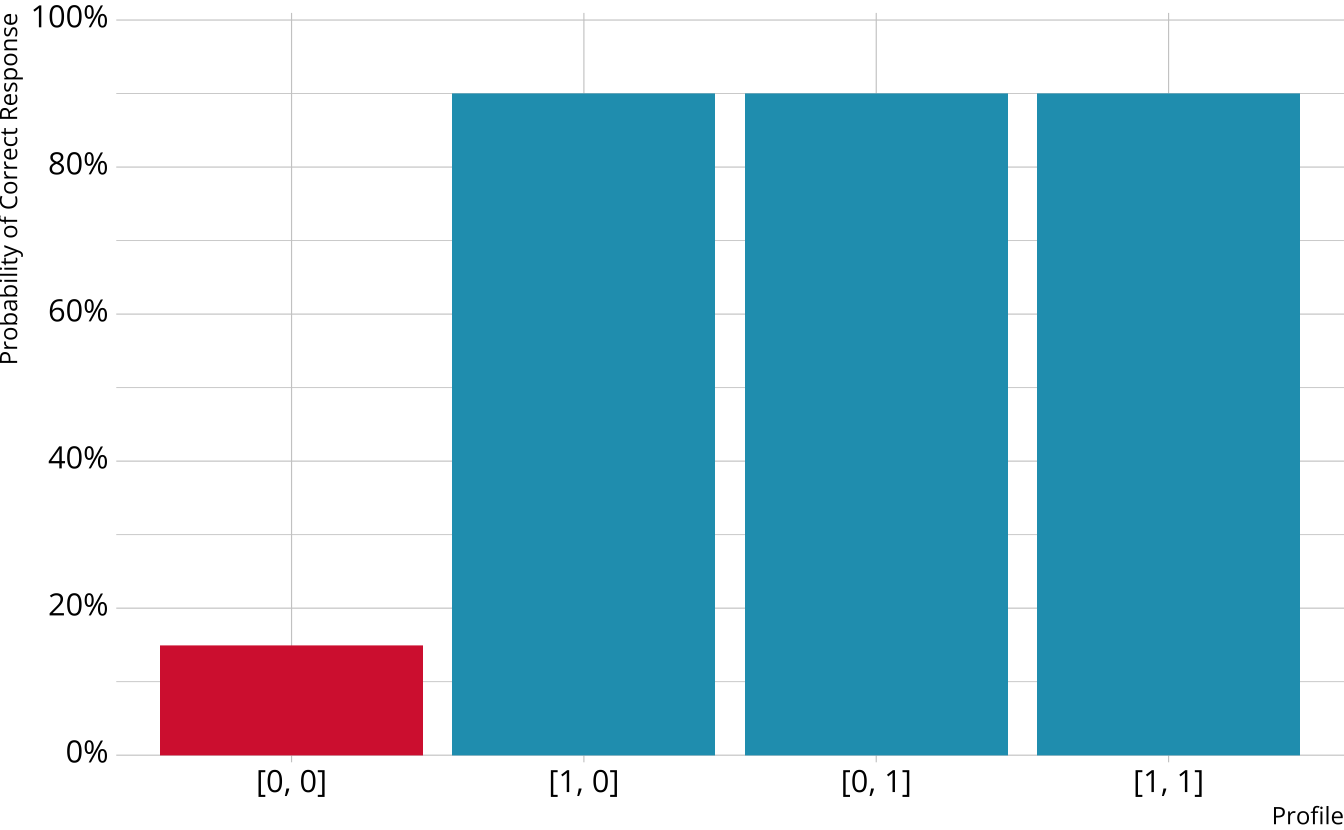

With binary attributes, there are \(2^A\) possible profiles

Example 2-attribute assessment:

[0, 0]

[1, 0]

[0, 1]

[1, 1]
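The \(2^A\) count comes from every attribute independently taking the value 0 or 1. A minimal Python sketch (the helper name `attribute_profiles` is hypothetical) enumerates the profiles with the standard library; note that `itertools.product` lists them in a different order than the slide does.

```python
from itertools import product

def attribute_profiles(n_attributes):
    """Return all 2**A binary attribute profiles as tuples of 0/1."""
    return list(product([0, 1], repeat=n_attributes))

print(attribute_profiles(2))       # all 4 profiles for a 2-attribute assessment
print(len(attribute_profiles(4)))  # a 4-attribute assessment has 16 profiles
```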

DCMs as latent class models

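\[ \color{#D55E00}{P(X_r=x_r)} = \sum_{c=1}^C\color{#009E73}{\nu_c} \prod_{i=1}^I\color{#56B4E9}{\pi_{ic}^{x_{ir}}(1-\pi_{ic})^{1 - x_{ir}}} \]

where \(C = 2^A\) is the number of latent classes (attribute profiles), \(\nu_c\) is the proportion of respondents in class \(c\), and \(\pi_{ic}\) is the probability that a member of class \(c\) answers item \(i\) correctly.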
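The sum-of-products above can be evaluated directly. The NumPy sketch below is illustrative only: the class proportions \(\nu_c\) and item probabilities \(\pi_{ic}\) are hypothetical values, not estimates, and the function names are made up for this example. It computes \(P(X_r = x_r)\), then applies Bayes' rule to get each class's posterior probability and sums those over profiles to get the attribute proficiency probabilities (the person parameters).

```python
import numpy as np

def response_likelihood(x, pi):
    """P(X_r = x_r | class c) for every class c.

    x  : (I,) array of 0/1 responses for one respondent
    pi : (C, I) array, pi[c, i] = P(correct on item i | class c)
    Items are conditionally independent within a class, so probabilities multiply.
    """
    return np.prod(pi ** x * (1 - pi) ** (1 - x), axis=1)

def marginal_probability(x, nu, pi):
    """P(X_r = x_r) = sum over classes of nu_c times the class likelihood."""
    return float(np.sum(nu * response_likelihood(x, pi)))

def class_posterior(x, nu, pi):
    """Bayes' rule: P(class c | X_r = x_r)."""
    joint = nu * response_likelihood(x, pi)
    return joint / joint.sum()

# Classes in the slide's order: [0, 0], [1, 0], [0, 1], [1, 1]
profiles = np.array([[0, 0], [1, 0], [0, 1], [1, 1]])
nu = np.full(4, 0.25)  # hypothetical: equal class proportions
# Hypothetical pi: item 1 targets attribute 1, item 2 attribute 2, item 3 both
pi = np.array([
    [0.2, 0.2, 0.1],
    [0.9, 0.2, 0.3],
    [0.2, 0.9, 0.3],
    [0.9, 0.9, 0.9],
])
x = np.array([1, 0, 0])  # correct on item 1 only

posterior = class_posterior(x, nu, pi)
attribute_probs = posterior @ profiles  # P(proficient) for each attribute
print(marginal_probability(x, nu, pi))
print(posterior.round(3))
print(attribute_probs.round(3))
```

The respondent is most likely in class [1, 0], and the attribute probabilities summarize that: high for attribute 1, low for attribute 2.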

Item response probabilities

Numerous DCMs have been developed over the years

Each DCM makes different assumptions about how attribute proficiencies combine or interact to produce an item response

Non-compensatory DCMs

Must be proficient in all attributes measured by the item to provide a correct response

Deterministic inputs, noisy “and” gate (DINA; de la Torre & Douglas, 2004)

Compensatory DCMs

Must be proficient in at least 1 attribute measured by the item to provide a correct response

Deterministic inputs, noisy “or” gate (DINO; Templin & Henson, 2006)

General DCMs

Different response probabilities for each class (partially compensatory)

Log-linear cognitive diagnostic model (LCDM; Henson et al., 2009)

This will be our focus

Simple structure LCDM

Item measures only 1 attribute

\[ \text{logit}[P(X_i = 1 \mid \alpha)] = \color{#D7263D}{\lambda_{i,0}} + \color{#219EBC}{\lambda_{i,1(1)}}\color{#009E73}{\alpha} \]
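Inverting the logit turns the two item parameters into two response probabilities, one for each level of the attribute. A minimal sketch with hypothetical parameter values (`inv_logit` and `lcdm_simple` are illustrative names): the intercept sets the probability for non-proficient respondents, and a positive main effect raises it for proficient ones.

```python
import math

def inv_logit(z):
    """Convert a log-odds value back to a probability."""
    return 1 / (1 + math.exp(-z))

def lcdm_simple(lambda_0, lambda_1, alpha):
    """P(X_i = 1 | alpha) for an item that measures a single attribute."""
    return inv_logit(lambda_0 + lambda_1 * alpha)

# Hypothetical item parameters, not estimated from any data
lambda_0, lambda_1 = -1.5, 3.0
print(round(lcdm_simple(lambda_0, lambda_1, 0), 3))  # 0.182 (non-proficient)
print(round(lcdm_simple(lambda_0, lambda_1, 1), 3))  # 0.818 (proficient)
```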

Subscript notation for \(\lambda_{i,e(\alpha_1,...)}\)

- i = The item to which the parameter belongs

- e = The level of the effect

- 0 = intercept

- 1 = main effect

- 2 = two-way interaction

- 3 = three-way interaction

- Etc.

- \((\alpha_1,...)\) = The attributes to which the effect applies

- The number of attributes listed equals the effect level \(e\) (e.g., a two-way interaction lists 2 attributes)

Complex structure LCDM

Item measures multiple attributes

\[ \text{logit}[P(X_i = 1 \mid \alpha_1, \alpha_2)] = \color{#D7263D}{\lambda_{i,0}} + \color{#4B3F72}{\lambda_{i,1(1)}\alpha_1} + \color{#9589BE}{\lambda_{i,1(2)}\alpha_2} + \color{#219EBC}{\lambda_{i,2(1,2)}\alpha_1\alpha_2} \]
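With two attributes, the same equation yields one probability per attribute profile. The sketch below uses hypothetical parameter values and illustrative function names; the interaction term only contributes for respondents proficient in both attributes.

```python
import math
from itertools import product

def inv_logit(z):
    """Convert a log-odds value back to a probability."""
    return 1 / (1 + math.exp(-z))

def lcdm_two_attribute(l0, l1, l2, l12, a1, a2):
    """P(X_i = 1 | alpha_1, alpha_2) for an item measuring two attributes."""
    return inv_logit(l0 + l1 * a1 + l2 * a2 + l12 * a1 * a2)

# Hypothetical parameters: intercept, two main effects, one two-way interaction
params = dict(l0=-2.0, l1=1.5, l2=1.0, l12=1.0)
for a1, a2 in product([0, 1], repeat=2):
    p = lcdm_two_attribute(**params, a1=a1, a2=a2)
    print(f"[{a1}, {a2}] -> {p:.3f}")
```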

Defining DCM structures

Attribute and item relationships are defined in the Q-matrix

Q-matrix

- An I \(\times\) A matrix (rows are items, columns are attributes)

- 0 = Attribute is not measured by the item

- 1 = Attribute is measured by the item
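A Q-matrix is just a 0/1 array, and each row tells us which LCDM terms that item gets. The sketch below is a hypothetical 4-item, 2-attribute example that borrows two of the soccer attributes; it is not from a real assessment. It reports whether each item has simple or complex structure and how many parameters a full LCDM would give it (\(2^k\) for an item measuring \(k\) attributes: an intercept plus every main effect and interaction among them).

```python
import numpy as np

# Hypothetical Q-matrix: rows = items, columns = attributes
attributes = ["finishing", "defending"]
Q = np.array([
    [1, 0],  # item 1: finishing only
    [0, 1],  # item 2: defending only
    [1, 1],  # item 3: both attributes
    [1, 0],  # item 4: finishing only
])

for i, row in enumerate(Q, start=1):
    measured = [name for name, q in zip(attributes, row) if q == 1]
    k = int(row.sum())
    structure = "simple" if k == 1 else "complex"
    # Full LCDM item with k attributes: 2**k parameters
    print(f"item {i}: {measured}, {structure} structure, {2 ** k} LCDM parameters")
```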

The LCDM as a general DCM

It is called a “general” DCM because the LCDM subsumes other DCMs

Constraints on item parameters make LCDM equivalent to other DCMs (e.g., DINA and DINO)

- Interactive Shiny app: https://atlas-aai.shinyapps.io/dcm-probs/

- DINA

- Only the intercept and the highest-order interaction are non-zero

- DINO

- All main effects are equal

- All two-way interactions are -1 \(\times\) main effect

- All three-way interactions are -1 \(\times\) two-way interaction (i.e., equal to main effects)

- Etc.
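The constraints above can be checked numerically for a two-attribute item. In this sketch (hypothetical values and illustrative function names, in the spirit of the Shiny app rather than a copy of it), the DINA constraint leaves only class [1, 1] with a high probability, the "and" gate, while the DINO constraint gives every class with at least one proficient attribute the same high probability, the "or" gate.

```python
import math
from itertools import product

def inv_logit(z):
    """Convert a log-odds value back to a probability."""
    return 1 / (1 + math.exp(-z))

def class_probs(l0, l1, l2, l12):
    """Map each attribute profile (a1, a2) to P(X_i = 1) under the LCDM."""
    return {
        (a1, a2): inv_logit(l0 + l1 * a1 + l2 * a2 + l12 * a1 * a2)
        for a1, a2 in product([0, 1], repeat=2)
    }

l0, effect = -2.0, 4.0  # hypothetical intercept and effect size

# DINA: main effects fixed at 0; only the interaction is non-zero
dina = class_probs(l0, 0.0, 0.0, effect)

# DINO: equal main effects; two-way interaction = -1 x main effect
dino = class_probs(l0, effect, effect, -effect)

for profile in dina:
    print(profile, f"DINA={dina[profile]:.3f}", f"DINO={dino[profile]:.3f}")
```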

The rest of today
